Work & Personal Projects

A Deep Generative Model for Stellar Spectra: A Case Study in Machine Learning

My Journey in Health Tech: Building Secure Healthcare Solutions in Barrie with metricHEALTH

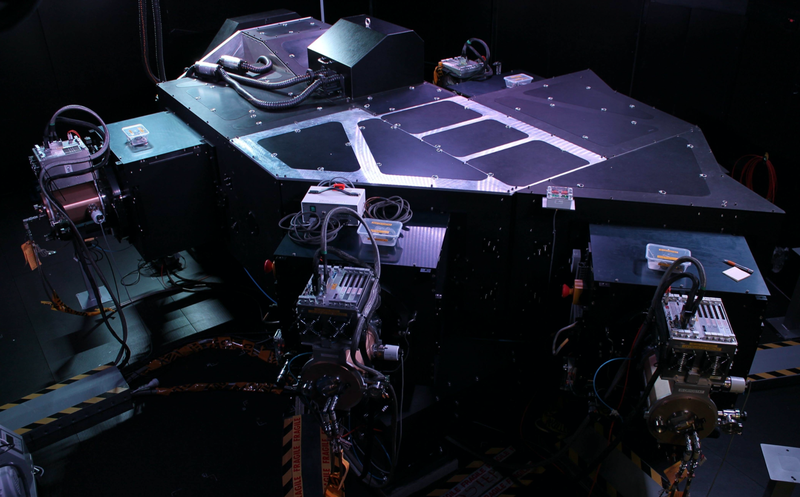

CarePal: Developing an Award-Winning AI Companion at the Georgian College Hackathon

AutoML vs. Deep Learning: A Case Study in Forecasting Iowa’s Liquor Sales

Case Study: How does a bike-share navigate speedy success?

Case Study: A Century of Natural Disasters: Unveiling the Global Impact, Trends, and Response

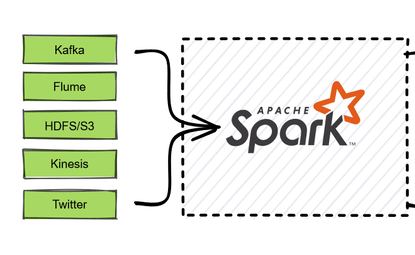

Real-Time ETL: Enterprise Data Processing with Apache Spark and Kafka

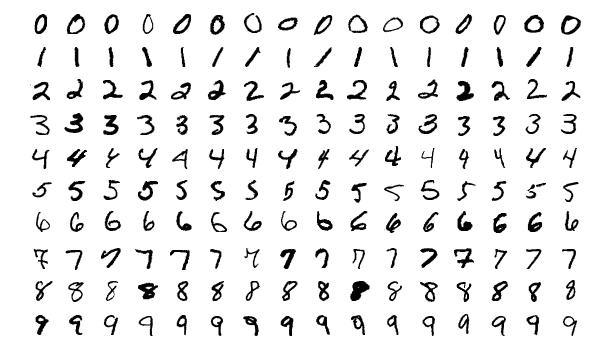

Creating Neural Networks from Scratch Using NumPy and Math